Introduction to Technology Bundle

Technology resources for deafblind individuals.

In 2020, individuals from the reference group consistently stressed to Deafblind Australia (DBA) the importance of training in the use of technology.

DBA appreciates the feedback from the 2020 reference group and has since been working to provide technology training.

What kind of training are we providing?

We are providing three kinds of training: one-on-one, in-person training; training facilitated online; and videos that individuals can watch on their own.

The training videos will cover many different aspects of technology.

We hope that you will enjoy learning many new things from these training videos.

This project is funded by the Australian Government Department of Social Services. Go to dss.gov.au

Technology Overview

Description: The title “Deafblind Australia presents: Deafblind Assistive Technology Overview” appears. Ben appears with glasses and a beard against a black background, signing in Auslan. Throughout the video, he points to photos of various devices displayed in the top right corner.

“Hi, this video is about deafblind assistive technology.

Deafblind Australia has been working on a project to roll out workshops all over the country, but unfortunately, with the emergence of the COVID-19 pandemic, many of these workshops had to be cancelled.

We’ve also heard from deafblind community members that online workshop platforms such as Zoom don’t work very well for the deafblind community when discussing topics like technology. Given the situation, we decided to postpone the workshops and focus instead on producing this video resource about the variety of assistive technology available in Australia.

This video will cover a range of technology available from a specialist business named Quantum, which focuses specifically on low vision and braille technology. Quantum has offices in Queensland, New South Wales, Victoria and South Australia. For those living in Western Australia or Tasmania, devices similar to those covered in this video are available from a business named Vis-Ability. Vision Australia also sells the products covered in this video throughout the country.

If, while you’re watching this video, you see a specific device that you’re really interested in, you can contact Quantum to arrange a visit to your home or workplace, possibly with an occupational therapist, to assess which technology will best support your access.

What is assistive technology?

Assistive technology is a very broad term. It can mean anything from simple technology like a magnifying glass through to sophisticated technology like a self-contained braille computer. Both of these devices are examples of assistive technology.

Assistive technology can also mean software that is added to a computer or tablet to allow for magnification, voiceover or braille connectivity. Deafblind people primarily use three types of software for accessibility.

- The first is magnification software,

- the second is screen reading software, and

- the third is braille output software.

Sometimes people will use a combination of all three.

This video will cover a variety of technology, and if there are any devices you’re interested in learning more about, you’ll find contact information at the end of the video.

Magnification

Magnifiers such as the one in the picture are simple and easy to use.

(Generic image of magnifying glass)

They’re portable.

You can put it in your bag and take it with you to the shops.

They’re great for helping people read things, either while shopping or at home. There’s a variety of magnifiers available, and which one suits you depends on how strong you need the magnification to be, from mild magnification to very strong magnification. You can choose either the traditional type of magnifier or an electronic stand magnifier, which can be used for reading documents. Both are available.

(Generic image of a stand electronic magnifier)

Simple magnifiers, like the ones pictured, can provide up to 12 times magnification.

Digital magnifiers look similar to an iPad or a tablet.

(Generic image of an iPad)

It’s a square device that can be pointed around to magnify things in the environment. Because these devices are digital, they’re capable of very strong, very intense magnification.

Some also have added features, such as the ability to change the background colour and the font colour.

Some digital magnifiers also have a separate camera that can be used to see things at a distance and display them on the screen. This is great, for example, for watching an interpreter at a distance, and if you’re doing something like applying make-up, it can make it much easier to see what you’re doing.

Some magnifiers have screen reading technology built in. For example, if I was trying to read some text that I couldn’t really see, I could take a photo of it with my magnifier and then have it read the text out to me. The voiceover can also be streamed to a cochlear implant or a hearing aid.

This device is called an Acesight.

(Generic image of product)

It has a camera mounted in a headset that allows us to have a very expanded, magnified view, right in our frame of vision.

It’s great for watching sport or theatre, or an interpreter in a conference or forum environment.

The picture can also be adjusted on this device. You can control how strong the magnification is, as well as settings like colour contrast.

Computer software

When deafblind people work on computers, they often need the screen to be magnified, and there are a couple of ways this can be done.

The first is to use the inbuilt features of the device itself; the other is to download separate software and install it on the computer. Which program is right for you depends on whether you’re looking for magnification, screen reading or a combination of both.

Some popular software in the deafblind community includes:

- ZoomText

- JAWS

- Fusion

- NVDA

ZoomText is a very popular program because it includes both magnification and screen reading software.

It provides up to 7 times zoom without distorting or changing the font, so the writing remains clear and easy to read, even under strong magnification.

When using ZoomText, you can move around and navigate the computer with either the mouse or the keyboard.

It’s very easy to use. ZoomText is also great because, when you’re typing under strong magnification, it stays centred on the cursor, helping you stay oriented on the page so you don’t get confused about where you are on the screen.

Sometimes ZoomText, or a program like it, doesn’t provide enough magnification, or you might be using a laptop where the screen itself is small.

Another option for magnifying the image is to connect a second monitor to the computer via HDMI.

This way, the computer and the monitor display the same information, but on the monitor it is greatly magnified. A setup like this is great for participating in Zoom meetings or watching anything that’s being interpreted online.

Braille

There are a variety of braille devices out there, and most of them can be connected to a smartphone or tablet via Bluetooth. Once connected, the user has full control over the device using only the braille display. Braille has several benefits.

The first is that it is a quiet and totally private way of receiving information. You can read braille in public and no one knows what information you’re taking in.

If you’re doing public speaking or giving a speech, having your prompts and notes in braille is a great way to check where you’re up to and make sure you don’t miss any information, all without stopping or interrupting your speech. For people who are fully deafblind, braille skills mean that they can retain their access to information and communication with the wider community.

We’ve talked a little bit about pairing braille displays with smartphones and tablets, but there is another device that gives all the benefits of pairing a braille display with a computer, without needing a separate computer.

This device is called an ElBraille, and it is a fully functional laptop computer housed entirely within a braille display.

(Generic image of an ElBraille)

It’s the same size as just a braille display, but has the full functionality of a laptop computer.

More information:

Quantum Reading Learning Vision


BrailleNote

Description: Vanessa, with long hair, appears on the right side of the screen, discussing her BrailleNote. On the left, Ben, an Auslan interpreter with a beard and glasses, signs in Auslan.

(Screenshot of interpreter on left and presenter on right)

“Hi, my name is Vanessa Vlajkov from Perth.

The main communication device I use is called a BrailleNote.

It has been upgraded over the years, but it’s still mostly the same-looking machine; it has just gotten a bit fancier.

I started learning braille when I was four, but I didn’t get my first BrailleNote until I was seven. I think I was given a BrailleNote by the Education Department; because I was at school, I didn’t need to buy one myself. So I was provided with one through school until I graduated, and then I used funding to buy my own.

They come from an organisation called HumanWare. With my BrailleNote, which is the latest version, the BrailleNote Touch Plus, I do everything from texting and emailing to social media, uni assignments and everything else you can think of. It connects to my phone via Bluetooth and can also be connected to an iPad or other devices.

When it’s connected, I use it as a display, so everything that appears on the iDevice appears in braille for me.

So that’s how I communicate with the world.

When it doesn’t work there is no world for me.”


Roger Pen

Description: Jennifer appears on the right side of the screen, wearing a red jumper and discussing her Roger Pen. On the left, Ben, an Auslan interpreter, signs in Auslan.

(Screenshot of Jennifer in home office)

“Good morning, my name is Jennifer Weir.

I am vision and hearing impaired due to Usher Syndrome.

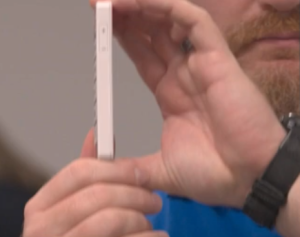

Today I’m going to talk about the Roger Pen, which is up here on my computer.

What is a Roger Pen?

(Image from hearingchoices.com.au)

A Roger Pen is a device that uses FM and Bluetooth technology to transfer sound from a television, a computer, a radio or even a conversation directly into my cochlear implant and hearing aid.

I received my Roger Pen about 15 months ago, after getting a cochlear implant and a new hearing aid, because I needed good streaming capability for my volunteer work and lifestyle.

The main thing I use my Roger Pen for is my computer. It streams the speech from a screen reader directly into my hearing aid and cochlear implant, which makes it much easier for me to work, as I can’t see very well.

I also use my Roger Pen for listening to the television and the radio. It streams directly into my cochlear implant and hearing aid. I can control the sound and the volume, and it also lets me turn the sound down to zero when everybody else is doing something else and doesn’t want to watch or listen to what I’m listening to. It’s great.

I also use the Roger Pen at board meetings and any other kind of meeting. I turn the microphone on and put it on the table, pointing towards where the conversation is coming from, and the Roger Pen picks up that conversation and streams it directly into my cochlear implant and hearing aid.

It will also switch to the next person who starts speaking, in other words, the next loudest voice.

It works most of the time.

It’s very, very handy, as meetings can be quite difficult at times.”

[Jennifer demonstrates the use of a Roger Pen in receiving instructions from her golf coach, while hitting a golf ball]

(Screenshot of Jennifer hitting a golf ball)

[Golf caddy speaks]

“Okay Jennifer, address the ball.

Close the club slightly,

bit more…

bit more…

fraction more…

that will go,

fine.”

“I just hit a ball, and my caddy gave me directions through the Roger Pen straight to my hearing aid.

He’s standing quite a distance away for safety reasons, so I use this when I’m playing golf.

The Roger Pen is also useful for one-to-one conversations with other people who use Roger Pens and are extremely hard of hearing. This is great in social situations, because you can have a quiet conversation through your Roger Pen with someone else and understand what is being said.

I hope you have enjoyed watching this video.

For further information on the Roger Pen, just search for “Roger Pen Australia” on Google; there’s information on that site.

Thank you”

More info: www.phonak.com/au


Accessible Technology: Deafblind Awareness

Description: Paola appears on the left side of the screen, wearing a dark blue jumper and a long side plait, giving a speech about deafblindness. On the right side, PowerPoint slides accompany her speech.

(Screenshot of presenter on left and information on right)

“Hello everybody. My name is Paola Avila, and this is my sign name. I am a deafblind woman. I’d like to start by thanking DBA for inviting me to be here today to present about my experience as a deafblind person.

I think it’s really valuable for people to hear the deafblind perspective, and this is a great opportunity for everyone in the workshop to understand not only what deafblindness is, but also how to work most effectively with deafblind people, especially online. So, the objectives of my discussion today are outlined here:

Objectives

1. What does deafblind mean?

2. Different vision types for deafblind people

3. Technology

4. Questions and answers

First, I’d like to take us through a definition of deafblindness, and then I’ll show some examples of the various eye conditions that deafblind people may have. That will really help your understanding, because I can sit here and talk about this in theory as long as I want, but it’s not as impactful as showing you those sorts of images. I’m also going to talk about technology. As we know, everyone is obsessed with technology these days, and that definitely extends to deafblind people. Some pieces of technology are really difficult to use, but technology can also be a great way for people to overcome barriers. Finally, we’ll have some time for questions and answers at the end.

Outcome

By the end of this presentation, you will be able to understand more about deafblindness and communication methods, and how to work better with us using technology.

So, the outcome for today’s workshop.

After the presentations today, I’m hoping that you’ll have an improved understanding of what deafblindness is, and the different ways you can communicate with deafblind people, and I also hope you’ll have a better understanding of how to work effectively with deafblind people online, using some different pieces of technology.

Let’s look at these two words, deaf and blind. I’ll talk about them separately.

First of all, deafness and blindness.

“Deaf” in the dictionary

- Hearing loss

- Half deaf

- Difficulty hearing

- Profoundly deaf

- Deafness

- Hearing impaired

- Hard of hearing

- Fully deaf

- Deaf or deaf

- Oral

- Severe hearing loss, etc.

Deafness, as defined in a dictionary, is quite broad. The definitions use terms such as hearing loss, or describe somebody as profoundly deaf, hard of hearing or half deaf; somebody might have deafness, or be described as fully deaf, as having difficulty hearing, or as having a hearing impairment.

You might see ‘deaf’ written with either a small ‘d’ or a capital ‘D’. There are many, many varieties of hearing loss, and all of these related concepts fall under what we term “deaf.”

“Blind” in the dictionary

Unable to see because of disease or a congenital condition or injury.

- Vision loss

- Difficulty seeing

- Blindness

- Totally blind

- Vision impaired

- Unsighted

We find a similar situation when we look at the dictionary definition of blindness. There’s a lot of different terminology associated with the concept. You might see things like being unable to see, congenital conditions, or injuries related to vision.

You might also see terms like vision impairment, which is a common phrase, as is blindness. You might describe someone as unsighted. So there’s a whole bunch of terminology that falls under “blind.”

We then need to combine these concepts of deafness and blindness; in the deafblind context they can’t really be separated, but I’ll unpack that more in a minute.

The “hearing world” (or what we would term “hearing people”)

We often find that hearing people who go blind have a very different cultural experience of it than a deafblind person would. For a deafblind person, deafness and blindness cannot be separated; together they form a single, unique disability.

This can become quite problematic when somebody is becoming deafblind, because they have to do a lot of learning about how they’re going to communicate once their senses have declined. I’ll go into that in more depth later.

Common words used in the deafblind community:

- DeafBlind (DB)

- Usher Syndrome (Usher)

- Retinitis Pigmentosa (RP)

Most of you in the room would be familiar with the term deafblind, I’m sure, but within that community there are some terms you will see quite frequently. The first is deafblind shortened to just “DB”, an initialism. You’ll also hear the term “Usher”, or Usher Syndrome, and lastly Retinitis Pigmentosa, which is often shortened to “RP”.

These are the most commonly used terms relating to deafblindness.

What does ‘Usher Syndrome’ mean?

I’ll speak more specifically about Usher Syndrome now, which is the condition that I have. In Usher Syndrome, the vision impairment typically manifests as tunnel vision, but I must say at this point that this is not uniform for everyone with Usher Syndrome. Some people have quite a large field of vision; for others it’s significantly reduced. Some people may have tunnel vision, or spots in their vision.

It varies widely. As well as being a diagnostic category, the term ‘Usher Syndrome’ is also a way that people define themselves and self-identify in the deafblind community. You don’t see that as much in the hearing world, where Usher is just a diagnosis, but for deafblind people it can be an identity as well.

Retinitis Pigmentosa (RP)

Retinitis Pigmentosa is another term used for ‘Usher Syndrome’, and it is often used by medical professionals.

Retinitis Pigmentosa, or “RP”, can be used more or less interchangeably with Usher Syndrome, but most people choose the term “Usher” for their identity, even if they were diagnosed with Retinitis Pigmentosa in the past. “RP” is quite a medical term; some people do still use it, but most people who have Retinitis Pigmentosa would self-identify, especially here in Victoria, as having Usher Syndrome.

(Screenshot of slide)

This slide unpacks the three different types of Usher Syndrome. I believe there are up to 21 different classifications of Usher worldwide, but I’m going to talk about these three because they’re the most commonly seen here in Australia.

I have Usher Syndrome Type 1. My partner, who also has Usher Syndrome, has Type 2. So we both have Usher, but quite different types.

- Type 1 Usher Syndrome usually refers to people who lose their hearing at quite an early age or are born deaf, and whose sight then begins to degenerate over the years. For some people the impairment reaches a point where it stops; for others the loss is ongoing. People with Usher Type 1 also often have balance problems, which are related to their hearing.

- Type 2 Usher (which, as I mentioned, my partner has) refers to people who don’t develop hearing loss until roughly their late teenage years, and who also start to experience vision loss at about that time. They don’t tend to have balance problems because of the late onset of their hearing loss, although a sudden and complete hearing loss will affect balance. Both Type 1 and Type 2 Usher Syndrome also cause night blindness.

- Type 3 refers to someone who goes through life largely unimpaired until, at some point in adulthood, maybe in their late 20s, they experience a sudden or very rapid loss of vision and hearing. This can be very difficult because, when those losses occur, the person often has no alternative communication skills or adaptive technology like hearing aids, so it’s a big change all at once. We really encourage everyone with Type 3 Usher to learn sign language as soon as possible in order to keep up their communication.

We often find that people with Usher Syndrome Type 2 are quite strong oral communicators, and they come to Auslan and sign language later in life. Some people are interested in learning it; others tend to put it off. As for me, I was diagnosed at 7, and I feel I really should have started learning braille early in life, but I kept putting it off. Looking back now, I think I might be a bit past being able to learn it easily, so I wish I’d learnt braille sooner.

To backtrack a little to my personal story: I was diagnosed as deaf when I was 18 months old, and at 7 years of age I started to experience issues with my vision. I started losing my vision in chunks, roughly every seven years. My right eye is now pretty much fully blind due to macular degeneration; it’s like having a black fog over the whole field of vision in that eye.

My left eye still has some sight. I have cataracts, which are very, very common for people with Usher Syndrome. I know there is surgery to remove cataracts, but I don’t know how helpful it would be for me, and it could actually make some things worse, or at least that’s what I’ve heard. I know cataracts are quite common in the hearing world, and that people have the surgery without many problems, but I have heard stories of people with Usher Syndrome having their cataracts removed and experiencing negative outcomes, so I’m a bit hesitant. As I said, I’ve got the black fog in my right eye, but in my left eye, the one with the cataract, it’s a lighter-coloured fog.

You can see two different diagrams here. On the left is a normally functioning eye, like most people here have; you can see the back of the retina, with everything functioning normally. On the right is an eye similar to that of someone with Retinitis Pigmentosa or Usher. You can see the pigment there, and how it can stop vision from functioning.

Again, on the left we have a photo of the back of a normal retina, and in the photo on the right you can see all the black splotches. It may start with only a few splotches, and then over a number of years they spread; if there are too many, particularly around the centre, that can cause total blindness.

It’s quite sad that no surgical intervention is possible for this. I know there is research happening in this area, but at the moment nobody knows how to treat Retinitis Pigmentosa. Researchers think it might have to be gene therapy or something targeting DNA, but I don’t want to go too deep into that now. As it stands, there is no surgical intervention. We hold out hope that someone may find a cure, but it is just hope.

(Screenshot of slide)

I’m going to show some images now that replicate different kinds of vision deafblind people may have, depending on their condition. This first one replicates how people normally see. We’ve got a building, we can see the restaurant there, we can see the sunshine, and we can see the little bits of shade and the shadows from the buildings. It’s quite a nuanced image.

(Screenshot of slide)

RP Vision.

In this photo, you can see the dark and black splotches around the edges of the image. This is how most people with Usher Syndrome or Retinitis Pigmentosa see the world, so you can’t see all the buildings as we could before. There are some areas of white, grey and black fog around the outside of the image. Some might be small spots, some might be very thick sections, and they can be different shapes obscuring people’s vision. It looks a bit like a tube; for some people, vision is like looking through a drinking straw.

Remember, not everybody with Usher Syndrome has the same vision, and this brings up an interesting point. Any time you meet someone with Usher Syndrome, or any deafblind person, don’t assume you know what they’re going to need. Some people need you to be close to communicate. Some people need you to be further away. Some people need to hold your hands to track your signing. Some people will need you to sign in a slightly elevated position, but not in front of your face, which I’ll talk more about in a minute.

If I sign in front of my face, my hands are the same colour as my face, which makes it really difficult to see. If I hold numbers up like this, it’s hard to see my hand unless I move it down so that it contrasts against my clothes, and then it’s much easier to pick out. So you don’t want to sign in front of your face.

For me, as a person with Usher, vision is pretty much one-dimensional. You would describe normal vision as three-dimensional. You can see contours in a floor, for example, or textures, such as whether something is wet or shiny. You can see changes in elevation, or something like sand on a surface; there’s all that sort of detail in your vision.

For me it’s one-dimensional. I can’t see whether there’s a contour or whether something is flat. It makes me quite nervous when I’m walking places, because I tend to assume I’ll know where the lines are and where the ground level changes, and that’s not always the case.

This is something a lot of people with Usher Syndrome encounter. They can be quite tentative when walking, or use something like a white cane to get more information about what’s going on in the environment around them.

You might remember I mentioned cataracts before, and the white fog that comes with them.

(Screenshot of slide)

This picture is a good example of what that looks like. It’s quite blurry, and only a small portion of the picture can be seen.

(Screenshot of slide)

Something that’s quite common for people with Usher or Retinitis Pigmentosa, and certainly true for me, is sensitivity to glare. A lot of people with Usher Syndrome wear sunglasses specifically to counteract glare, but glare can still be a huge barrier to communication.

If I want to talk with my friends or people in the community and they’re standing with a window or something bright behind them, it’s impossible for me to communicate. The glare creates a kind of white-out, and the person appears totally black. So I can’t have anyone with a window at their back when they’re communicating with me. It poses a lot of problems in a car, for example, if I’m trying to talk to my partner and he’s got a window behind him. In situations where the glare is really problematic, I will hold the person’s hands so that I can at least track where they are in the signing space, which helps me follow what they’re saying.

(Screenshot of slide)

Another thing people with Usher Syndrome often have problems with is adjusting to changes in light. Let’s say, for instance, we’re in a restaurant, eating and having a great time, and then we go outside where it’s very bright. The time taken to adjust from a dark environment to a bright one can be anywhere from 1 to 5 minutes, depending on the person.

The same is true in reverse: if you’ve been outside and then go into a dark environment, it can take up to 5 minutes for your eyes to adjust to the new lighting.

(Screenshot of slide)

Then we have total blindness: the complete absence of vision. Some people reach this level of blindness progressively over time; others are born with it, so it’s very, very varied. And I stress again that the Usher community, and the deafblind community at large, is very diverse. Many deafblind people also have conditions such as glaucoma or macular degeneration; there’s a whole range of eye conditions that people might be living with. This also shows what I’m talking about: the time it takes me to look up here and figure out what’s on each slide is the result of the very limited tunnel vision I have in my left eye. So thank you for bearing with me.

(Screenshot of slide)

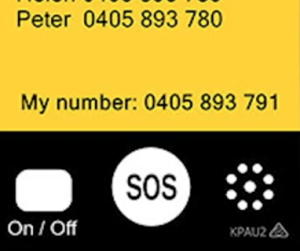

People in the deafblind community use lots of different apps to help them communicate with the wider community. I’ve listed some of the common ones here. The first one is:

Buzz Card

I’ve got a photo of it up here on the slide. You can have this on your phone. It has the right contrast and the thick text that I need to be able to see it. It’s really good for communicating with people out in the community, even if they have trouble seeing, for example an older person: it has nice, big, thick text that’s easy for everyone to read, and it’s easy for me to see as well as we have our conversation back and forth. Deafblind people often prefer a darker or differently coloured background rather than a white one, maybe orange or blue, with thick black text on it. That would be my preference, along with a larger font size.

The second app on the list here is called:

My Ear

This is quite new, but a lot of deafblind people are using it, as well as deaf people. Here’s how it works: let’s say you’re out and getting on a bus. If the door opens and the bus driver starts talking to you, I might not be able to understand them, but I can hold my phone up and the app will automatically convert the speech into text, like closed captions. It’s quite amazing. It also gives you the option to change the font size, the background and the font colour. It’s a really amazing app, but it’s not entirely accurate.

I would say about 20% of things get missed, which could be because the person has a strong accent or because there’s a lot of environmental noise interfering with the app. But it’s much, much better than nothing, so it’s a really valuable app to have on hand as well.

FaceTime/Duo

FaceTime is a pretty common app that almost everybody has, and Duo is, I think, Google’s equivalent. Most people in the deaf and deafblind community use video chat, but unfortunately it isn’t an option for all deafblind people, and I’ll speak a bit more about why in a moment. The next app is:

Skype

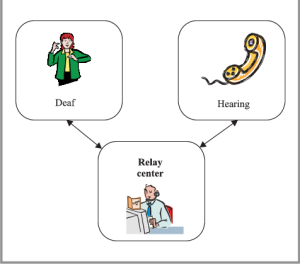

It’s been very, very common for a number of years now. Deafblind people often use it in place of the phone. If I needed to make a phone call to a hearing person, for example, I could use Skype to reach the video remote interpreting service. I give the interpreter the number and they make the call. When the hearing person talks, the interpreter signs to me, and when I sign, the interpreter speaks to the person on the other end. So that was a way to get around making phone calls.

The other one I’ve got listed here is-

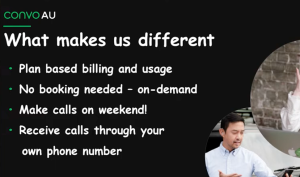

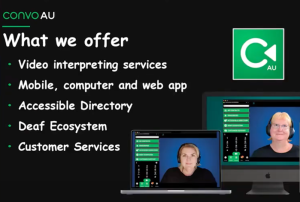

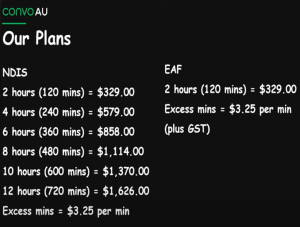

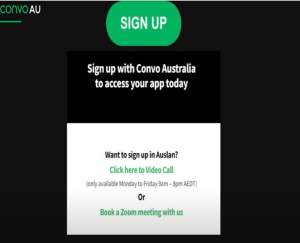

ConvoAu

This is similar to Skype, but a little bit different. Let’s say, for example, I’m out at the shops and I need to speak to someone. I can use this app to pull up an interpreter on my phone, literally out of my pocket, and say, “Hey, let this person know I need a haircut. I want my fringe trimmed.” It works almost like having an interpreter in your pocket.

This slide lists some other technology that deafblind people use. Everything up here is pretty common. You know, iPhone captions,

iWatches

These watches and vibrating alarms are quite useful for doorbells and things. It’s great being able to use something like an Apple Watch, where you just get convenient alerts. You don’t need extra equipment, or to be checking your door every 2 seconds to see if someone’s there. You can also use them for waking up, things like that.

Microphones and FM systems

Microphones and FM systems are quite common for people in the deafblind world who are primarily oral communicators. They use microphones to cut out some of the background noise. People who are mostly oral communicators also sometimes use FM systems, especially when they’re working on a computer or attending something online. They’ll have a little pendant they can connect, or a microphone they can give to a teacher or someone leading a workshop, which sends the spoken words straight into their listening device. That cuts out a lot of the background noise and makes it a lot easier to follow.

Android apps and systems

Android apps and systems are quite common. People use computers and laptops, and they use vibrating alarms, so something like a baby monitor, a fire alarm, or a doorbell. All sorts of different alert systems use vibration.

Hearing aids/cochlear implants are quite common, and then

braille reading devices as well.

These are some programs that deafblind people commonly have issues using. The first one there is-

Zoom

It depends on how many people are on the Zoom call. Let’s say, for instance, there are 20 people in a Zoom meeting, and an interpreter is included among them. Normally in meetings we’ll have two interpreters, who swap every 15 minutes. Often, if I’m in a large Zoom meeting, the interpreter can be very small on the screen and very, very difficult to see.

Generally, if there are only about 10 people, the video tiles aren’t as small and they’re easier to see. We can pin the interpreter’s video, which makes it nice and big, and that’s fabulous, but when we do that, we’re left out of the rest of the conversation. Just because we’re deafblind doesn’t mean we don’t want to be involved and see everyone else who’s in the room, same as you. You would want to feel part of the group, not just stare at the one screen all the time.

Another issue comes up for people with Usher Syndrome using these sorts of programs. I should just sidetrack here and say thank you to DBA for letting me be part of this program. I was involved in a DBA program that met on Zoom, and I’d never used Zoom before. It was very, very new to me.

When I got it set up, I could see what was going on, but tracking the icons and the different buttons on the screen was very difficult. I had a Commguide come and help me (I’ll speak more about what a communication guide is soon), and they showed me where things were in the program. They showed me how to download what I needed, how to pin a video, how to turn my own video off, how to turn the camera on and off, and how to type in the chat. It was a one-on-one tutorial, and it worked because the communication guide working with me had great Auslan skills and could explain everything I needed to do.

It was a great experience having that person there to help me, and it allowed me to really use Zoom and get involved, to the point where I’m quite comfortable using it myself now. But, again, Zoom is a problem for people who have lost a significant amount of vision or are approaching total blindness.

For someone at that point, I don’t think navigating a program like Zoom independently would be possible; they would need a Commguide with them. Also, if they can’t see what’s happening on the screen, they need an interpreter there, because we must remember that a Commguide cannot take the place of an interpreter, and I’ll explain more about that later. Many people with Usher Syndrome or other types of deafblindness still have trouble accessing Zoom because of the same issues we have accessing Commguides and interpreters.

Skype is also quite a problematic app, similar to what I discussed with Zoom. Knowing where the icons are and how to navigate the screen can be really difficult. I personally have a bit of a hard time with Skype because of the colour scheme. Everything is white, and the icons have only a subtle change in colour.

Also, sometimes the interpreter I’m accessing on Skype won’t have a dark coloured background. That’s really important for me to be able to understand them well. If their background is bright, it makes the whole thing unusable. It can also be difficult to understand a person’s particular signing style when they’re interpreting for me on Skype, and I can be locked out because of that.

FaceTime

I have used FaceTime, and how well it works depends on where I’m using it. If I use FaceTime outside, it’s no good. If I’m in the house, in more of a controlled light environment, it’s better, but again it depends on the Wi-Fi speed. If it’s too slow, it’s impossible to watch someone signing.

Microsoft Teams

The biggest problem I have with Microsoft programs is that they’re relentlessly and constantly updating. It feels like every time you learn something, an update comes along and changes it, and then you have to wait for the Commguide to come and show you how to navigate the app now that it’s changed.

Google Hangouts

This is another one that’s been quite problematic for me, because I find the interface really hard to navigate visually. Most people with Usher Syndrome, and most deafblind people, have issues with these sorts of apps: different colours, too much information on the screen, interfaces that don’t make visual sense, or wrong-coloured backgrounds. Sometimes the text is just laid out in a way that’s very difficult for someone with a vision impairment to navigate.

There are a lot of issues that can pop up. It’s also important to remember that a lot of deafblind people have issues with English, because for most of them it’s their second language. People might also be navigating the information in braille, which can become really long and tiring if there’s too much information. So, it’s important to keep things in short, sharp chunks of plain English wherever possible.

The difference between interpreters and Commguides is something that I alluded to before, and I’ll unpack that here.

So, for interpreters, like what Ben’s doing here, this is their job: transferring meaning from one language into another.

The Communication Guide, or Commguide, can sometimes assist with informal interpreting, but they’re also responsible for physical guiding, driving and transportation, taking people to different activities, helping them orient themselves to spaces, and making sure that they’re safe in an environment. The Commguide also really becomes a person’s eyes and ears, to ensure that they can be involved in the community in the way that they want to.

Deafblind people rely on Commguides a lot, and they can also support us with how to use different pieces of technology. Without Commguides, I wouldn’t know how to use any of my technology. It would be impossible to navigate any of it.

Okay, we’ve got time for questions and answers, but I think we’ll do that a little bit later.”

[Presenter, Paola, takes a break and then clip resumes as she comes back into view.]

[Host speaks]

“Okay welcome back everybody.

Just during the break, I’ve had a question for Paola from somebody on Zoom. Freida is asking if there are any courses for becoming a Commguide, and whether you know if they’re available remotely. So, I’ll hand back over to Paola. Thank you.”

[Paola speaks]

“Thank you, that’s an interesting question. My advice to anyone looking to get into working as a Commguide is to contact Able Australia. Many people have begun their careers in Commguiding at Able Australia, and there are a few options; there’s not really one pathway into this sort of work. There’s no formal course either. Most people begin with an Auslan qualification, whether that’s at Melbourne Polytechnic or RMIT or somewhere like that, and then you can also go to Able Australia for activity training, to partner up with a deafblind person and see how you go.

Really, there’s not just one process involved in doing this type of work. You could contact different deafblind organizations to find events that are going on, get involved and have a look that way, and see if the work might be something you’re interested in doing. We have to remember that this is not the sort of work you can learn about just theoretically; you have to get involved and do some practical work as well. You can also contact Deafblind Australia. They have different information and different contacts they could put you in touch with, if you’re interested in that kind of work.”

[Host speaks]

“On Zoom, Freida says she has Cert. 2, 3 and 4 in Auslan. She knows all about Able Australia, and used to volunteer at Deafblind Victoria.”

[Paola speaks]

“Amazing, congratulations on your study; it’s really wonderful that you’ve gone through those certificates. It might be worth visiting, in person, some different deafblind organizations or activities that aren’t connected with Able. Like you mentioned, DBV; maybe pop in there, or Deafblind Australia. It depends on where you’re located, but you might be able to visit some places.

You could also use social media. There are a lot of deafblind people on Facebook, and you could post on there that you are interested in doing some Commguiding and want to volunteer to build your skills up. There’s also an organization named Hire Up. They have some Commguides there, but really, I think the best piece of advice I could give you is to meet some deafblind people, do some volunteering, and go to some different events and check them out. That would be the next best step for you.”

[Host speaking]

“Thank you, Paola. Freida says she just wants to get more skills so she can contribute to the deafblind community, and she is getting one-on-one Auslan tuition from June Stathis and Rosette Busch. She says, ‘Thank you for answering our questions.’”

[Paola speaking]

“Fantastic, good luck and hopefully we’ll see you out and about in the future.

I might just speak a little bit more about communication guides, or Commguides, because Commguides are really a big part of the deafblind community. It is really important for Commguides to have Auslan skills. I may have mentioned this before, but really, there are 2 types of Commguides.

There is what I would call more of a helper model, and more of an empowerment model. Now, as to which one is the best fit, it depends on who the deafblind person is. Some people need a lot of help; some people are more independent, and they are keener to roll their sleeves up and do it themselves.

Some deafblind people might have additional physical issues, or physical disabilities, and they might need the Commguide to do a lot of, what I would call, helping. So, helping with toileting, or bathing, or ordering food, getting shoes tied, things like that.

There is another type of deafblind person who’s more independent, and that type of person will prefer to be taught how to do something. Like I showed you before with the Buzz Card, somebody might be confident communicating that way themselves, but it is still worth having a Communication Guide there just to keep them safe.

Let’s say, for instance, I go up and order using my Buzz Card, and then the waiter comes along and brings me a hot drink, but I am not aware that it has been placed on the table. The Commguide could alert me to where the drink is, so that’s part of keeping me safe.

Really, safety is a big part of a Commguide’s role. That means being knowledgeable about OHS and knowing what safety hazards to look out for in an environment. That is really, really important. As I have mentioned before, it is really important when you meet deafblind people that you don’t assume that we are all the same.

Some people might be very independent, some less so, and it’s best in these situations just to ask the person what they need and how they prefer to be supported. But when we’re doing that, it’s important that we use language that doesn’t throw the responsibility all the way back onto the deafblind person.

We do not say things like,

“Are you okay? Are you okay?” or “Can you see me in this?” When we use language like that, we are making it seem like the deafblind person is the one with the problem, but really, it is the lack of access that is the problem.

If access was provided appropriately, it would be easy for deafblind people to be involved, so we can ask about these things in ways that don’t disempower the deafblind person or make them feel ‘less than.’ I can tell you a story about when I went for an eye test. I had drops put in my eyes; I have to do this every few years just to check the extent of my tunnel vision. At the time, I did not have a Communication Guide. There was an interpreter there, though, and that was great. This took place about 10 years ago.

So, I went to the eye hospital, and I got the drops put in and they dilated my pupils, and I said to the Interpreter,

“Can you ask the reception where the women’s bathroom is please?” So, they asked, and then they told me, they said,

“It’s straight down the corridor, then you take a left,” and I thought, “Oh, that’s easy,” straight down the corridor, then left.

So, off I went straight down the corridor, took the left turn, and then hit a step that I didn’t know was there, right before the door, and I fell down the step, and into the door. Luckily, I didn’t have glasses on at the time because I probably would have broken them.

Now, this wasn’t the interpreter’s fault. Again, their job is to interpret what the receptionist said, and that’s what they did. But thinking back, I should have had a Communication Guide with me, because even though the interpreter interpreted what was being said, it wasn’t all the information I needed. There was an important extra piece I needed to know about the environment to keep me safe. But even when you have a Communication Guide, they’re not with you all the time.

We might not have enough funding to pay for a Commguide every time we need one, or they might not be available when we book. I learned from this experience that in the future I also have to take responsibility for asking for the information I need. So, I could ask the interpreter, “Where is the toilet?” and then say, “Is there anything else I need to know? Are there steps? Is there anything that could be a danger or a threat to me?”

We can’t always rely on Commguides. We need to take a little bit of personal accountability for our safety, and it’s really important that people who work with deafblind people, and people out there in the community, understand the difference between Communication Guides and interpreters.

I can talk a little bit more about technology in this space.

You might recall that earlier in the presentation I was talking about some apps and programs that deafblind people have issues using. I owe a massive debt of gratitude to the Commguides who show me how to use these programs, but it’s also important that people running meetings on online platforms know how to do so in a way that works for a deafblind person.

So, very simply, this could start with things like having a dark background and making sure that the interpreters are appropriately attired: long sleeves and dark clothing, with no logos or patterns on it. It’s important that the interpreter’s clothing contrasts with their skin colour, and that they have good lighting where they are as well. You want the lighting coming from above or off-centre, ideally not blasting right up into the face.

It’s also important to make sure that the onscreen interpreter is close enough to their camera so as not to appear very far away. We really only need to see the top half of the person’s body if they elevate their signing space slightly. Also, when the interpreters need to swap over, they will often let you know, “Okay, we’re going to have an interpreter swap,” but online, the deafblind person will then need to pin the second interpreter’s video.

In the deaf community, these sorts of online meetings tend to just steamroll along at a great rate of knots, but if you’ve got deafblind people in your meeting, you need to allow extra time for them to pin the videos they need. It’s also important in meetings like this to let people know who is speaking when they speak, and this is quite a difference between the deaf and deafblind communities.

In the deafblind world you can’t really catch who’s speaking on screen, so it’s important to say your name before you contribute, so that any deafblind people can follow what’s happening.

It’s as simple as just saying,

“Ben speaking, look I think this…” or

“Janet speaking, I have a question…”

Sometimes the Commguides will also let me know who’s come into the meeting. They might let the deafblind person know where the audience’s attention is directed, as well. Different pieces of environmental information like this come via the Commguide: sounds in the room, people coming in and out, things like that.

Another thing I should mention is waiting rooms, for people who have difficulty seeing, such as someone with Usher Syndrome. (Apologies, folks, I’m talking about a physical waiting room here, not a Zoom one.) Let’s say I’m in a waiting room and I don’t have a Commguide with me, because they weren’t available. I might be looking to see if the interpreter has arrived. Often what happens is the interpreter will arrive and say,

“I’m here for the deafblind person,” and if they know me, they might come up at this point to let me know that they’re here. They can place their hand on my shoulder, or on my arm, or on my leg.

It’s important that they place their hand, but don’t tap. If you tap, I can’t locate you in the space; I don’t know where you are every time your hand comes off me. But if you place your hand and leave it there, either on my leg or my arm, I can follow the line of your hand and arm up to where you are, and locate you in the space.

For an interpreter who’s never met me before, it would be a good idea to get there early enough to have a sort of warm-up conversation with me. That way I can let them know about my communication preferences and what I need. I can ask them to take their rings off, for example, or let them know that I need them to sign slowly.

That’s a good point, actually: rings and jewellery are really distracting visually, so it’s always best if interpreters aren’t decked out in earrings and rings and things of that nature.

I think it’s really important for organizations like the NDIS to improve their understanding of what deafblind people need. The NDIS is really impossible to navigate at the moment, but we rely on it for our funding for interpreters and Commguides. That is our lifeline to being involved in the world, to being involved in the community.

Unfortunately, access to interpreters and Commguides for deafblind people is not as easy as it is for, say, the deaf community. I might get an NDIS package that has plenty of funding for Commguides and interpreting hours in it. It might have a good allocation for, let’s say, classes and training in how to use my technology. It might also include funding to make my home safe and accessible, to make modifications to the lighting or to the physical layout of the home, and these might be modifications to do with access or safety, making sure that the house isn’t too dark, for instance.

So, a plan like this would include everything I needed to not feel disabled. Unfortunately, we know that the NDIS has this big fixation on reasonable and necessary supports, but they don’t understand the extent of support that is required for a deafblind person. They also don’t understand the impact that not accessing information has on deafblind people.

I really think the NDIS could benefit from a more holistic understanding of the deafblind experience. The same could be said for interpreters. Most people just focus on their Auslan, making sure that’s great, but they don’t think about all the other aspects of their job: their clothing, their positioning, their attitude, how they ask questions, what language they use. All these things can have a big impact.

Some people will be offended if you ask them, “can you see me?” It’s the same as asking a deaf person, “can you hear me?” It’s like going up and saying, “can you breathe?” It’s like, get out of here, stop throwing it all back on me!

So, I think the NDIS need to improve their knowledge of deafblind people, as do the interpreting practitioners among us, and I think also, councils and people like that, particularly if they’re doing road works.

It’s really important to let deafblind community members know that this is happening. Ultimately, it all comes back to something as simple as saying, ‘deafblind people need information,’ and that’s why you need things to be appropriately coloured and appropriately positioned. It’s all about access to information, and ultimately the safety that comes from that.

It’s about making sure that everyone has the right colours they need to be able to see things, and making sure that the interpreting workforce is big enough to support the people who need it. I’m hoping the recent announcement of free TAFE courses changes that, and that we will finally grow the interpreting workforce for deafblind people. But we also need the Commguide workforce to grow, and for that workforce to grow, Auslan skills are of primary importance.

I’d just like you to cast your mind back to type 3 Usher Syndrome, which we talked about earlier, where people have a very sudden and immediate loss of vision and hearing. This can have quite a profound impact on people’s mental health. They suddenly find themselves having to engage with medical personnel and all sorts of different things.

I would reiterate again that I think learning Auslan is so, so important for people in that situation. It really is one of those vital skills. Even though it mightn’t seem like it, acquiring that skill will at least give you the opportunity to communicate with other people after your hearing has completely declined.

If anyone’s got any questions don’t be shy, I’m more than happy to answer anything that anyone would like to know more about.”

[AI voice speaks]

“Is there a hereditary link to Usher Syndrome?”

[Paola speaks]

“I’m glad you asked me that question. That’s a really important bit that I left out. Yes, Usher Syndrome is a genetic condition. It’s interesting: in my family there’s no precedent, no one in other generations, but I once met a geneticist who said that the weakness in the gene often comes through the father’s side, and that a genetic weakness can be passed from generation to generation. In my family that doesn’t seem to be the case. So, the answer is yes, it is hereditary, but in my family, no, it wasn’t.”

[Host speaks]

“Freida has asked,”

“Will you be talking about haptics today?”

[Paola speaking]

“Oh, I’m happy you asked me that. This is great, see, there are a lot of things I’ve left out of the presentation, but they’ve come up in the questions, so that’s good. I didn’t know anything about social haptics until about 3 or 4 years ago, when I gave a presentation at the NAB. There were two interpreters there, and one of them offered to use haptics with me, and I said,

“What is that? I don’t know what that is.”

They said,

“Look, we can do some different gestures on your arm to let you know about what’s happening in the audience. For example, we can let you know if people are laughing or I can let you know if you’re speaking too fast, and you need to slow down. All these different cues, different ways we can communicate to let you know what’s happening in the audience. We can let you know if they’re bored, if they’re excited, if they’re looking around the place, or if someone has a question, so it’s a way of positioning another interpreter there to give you this information.”

This presentation, at NAB, was my first time using it. It was such a huge auditorium, maybe, I don’t know, 150 people there, so I couldn’t see if my jokes were landing, or if people were engaged in what I was saying. Having this ‘haptic’ feedback was fantastic.

It can happen either on the upper arm or the back. There’s a variety of ways to do it, and it was a very new concept for me, but a very helpful one. It means that while I’m presenting, I can get feedback like ‘you need to slow down,’ or feedback about what’s happening, without needing to interrupt my presentation. This is really taking off, and it’s used quite a lot in the community because it works for people more or less regardless of the extent of their vision impairment, so it’s really popular.”

“Janet speaking.

Do we have any other questions online or in the room?” [pause, while waiting to see if there are any questions].

“Thanks James.”

[James speaking]

“A lot of things are now becoming user-friendly for everybody, such as mobile phones. As someone who’s blind, I use my mobile phone as a tool. What are your thoughts on the NDIS not letting people purchase phones because they are an everyday device, when a lot of blind and deafblind people use their phones as accessibility tools?”

[Paola speaking]

“It’s a good question, and an interesting point, James. It’s definitely something I’ve had to advocate for a lot: needing a phone for a variety of reasons. If I could draw the comparison with the deaf community, some people have received funding for an iPad or a phone, and I had to explain that I can’t use an iPad, because I only have functional vision in one eye. It’s too big to be useful for me. I’ve explained that I need a phone with a good, high-resolution screen that I can actually see, and I make the argument that this is part of my accessibility needs because, let’s say, information is being released about a bushfire or about Covid.

This is the only way that I can access that information. Other people might be able to listen to the radio. They can listen to a podcast. They can hear people around them. For me I’ve got one way to access that information, and that’s through my phone.

So, it becomes a vital accessibility link here. There has been a little bit of back and forth, and argument with the NDIA about this, because they don’t necessarily accept my rationale for wanting the phone, but eventually it was accepted, because they understood that I could not use the iPad, and that what I needed the phone for, and the characteristics of the phone itself, meant it was the right device.

You know, another thing is that I can’t always have a Commguide with me, and I need to feel safe, and the phone is an important part of that. Really, it’s about your right to safety, your right to access information, and your ability to understand and connect with the world around you in your everyday life. I think the phone plays a really important part in that. Did I answer your question, James?”

[James speaking]

“Oh, yes, thank you.”

[Paola speaking]

“Most importantly of all, if there’s one takeaway: if you want more information about anything you’ve learned here today, make sure that the information is coming from a person with lived experience. Some courses like what we’ve done here today are taught by hearing people, and they don’t always cover what deafblind people think is important for you to know.

I think it’s important that any information you’re getting comes from a person with lived experience, and I would really encourage you all to get some practical experience in the deafblind world as well. If you have an opportunity to attend a deafblind world workshop, that’s great. It’s great for developing empathy. There are activities in those workshops where you can experience a little bit of what it’s like to be deafblind, and I think you would be quite surprised by your response during those activities. It’s a great way to help you better understand what it’s like to be without vision and hearing.

I would really encourage everyone here to go and do a deafblind world workshop with Deafblind Victoria if you can, but other than that, thank you for being here.

Thank you for listening to my presentation.

Thank you very much everybody.”

Paola Avila’s email address contactipaola@gmail.com


Technology Products, by TachTech

Description: Michelle appears on the left side of the screen, wearing a light grey sweater as she gives a speech. On the right, an interpreter with short hair signs in Auslan against a blue background; partway through the video they swap with another interpreter, who is wearing a dark blue shirt.

(Screenshot of Michelle, left and interpreter, right)

[Michelle speaks]

“Welcome, everyone, to our presentation, proudly brought to you by Deafblind Australia and also TachTech, which is my business, through which I provide one-on-one training.

I want to welcome everyone. Before I start, I must acknowledge that we are on the land of its traditional owners. I respect Elders past and future, and respect the land that we are now on.

I want to welcome you today to our presentation. For those who can’t see what’s happening, I’ve got various computers in front of me that I hope to show you, and hopefully encourage you to explore. As well, I’ve got a refreshable Braille Display in front of me, which I will show you in a minute.

I also want to dedicate this workshop today to a good friend of mine who recently passed away, Andrea Sherry, who got me started with computers. Andrea spent a lot of time working with me, and that time went towards a lot of the knowledge I have today. So, I want to say thanks, Andrea.

Today I’m going to show you a couple of platforms, or a couple of different Windows environments. In front of me here, I have a Windows HP computer, which is quite fast. As I said before, I also have a Focus 40 5th generation refreshable Braille Display, which connects by Bluetooth and also USB.

The main screen reader I’m using on my computer here is called ‘JAWS.’ That’s J A W S. It doesn’t mean the shark is going to attack you. No. It actually stands for Job Access With Speech. JAWS has been around for quite a while, and I’m running the latest version, JAWS 2022, at the moment. I will just start JAWS up for you so you can have a look and, if you are able to hear, have a listen to the synthesized voice. A screen reader basically conveys what people see on the screen, and it can present that with speech if you have hearing, or even if you’ve only got a little bit of hearing.

I’ll show you a device in a minute for people who have a little bit of hearing. You can also produce what’s on the computer in Braille. For instance, I will just call up my desktop in braille at the moment.

[AI voice speaks]

“folder view, list view, recycle bin, 1 of 16.”

[Michelle speaks]

“On my Braille Display,”

[AI voice speaks]

“To rename the selected item, press F2.”

[Michelle speaks]

“Thank you. On here, on my Braille Display, I’ve got ‘list view folder,’ and I’ve got my recycle bin, one of 16 shortcuts on my desktop. For instance, if I want to choose a particular item on my desktop,”

[AI voice speaks]

“Zoom 2 of 16,”

[Michelle speaks]

“I can either go to Zoom,”

[AI voice speaks]

“JAWS 2021”

[Michelle speaks]

“I’ve got an earlier version of JAWS over there…”

[AI voice speaks]

“Microsoft Edge… FS Reader 3.0.”

[Michelle speaks]

“I will show that in a minute: I have a program that will help teach people how to work JAWS. JAWS is the main leader in screen reader technology. As I said before, it doesn’t matter if you have only a little bit of hearing; you can still hear the voice, and I’m using a little device here called a Streamer. A streamer connects to your hearing aids without any wires.

If you have hearing aids that are Bluetooth enabled, you can connect your hearing aids to the streamer, and the streamer can pick up the voice. Now I’m going to make the computer talk. I will hear it through my hearing aids, but of course you won’t hear it, because the speech is off. I’m just going to turn my hearing out, 1, 2.

Okay, I’m just going to see now if I can get the voice to go through my hearing aids, and when you want the thing to work, it doesn’t!

(Screenshot of device)

Ah, okay, it wasn’t switched on. Okay. I now have my hearing aids connected to the streamer, and the streamer can tell you what’s happening. It doesn’t matter if you can’t hear; you don’t need the voice, because you can control JAWS completely by Braille.

Now, for those people who don’t know braille, there’s a program called Fusion, with which you can control a Braille Display and also the screen magnification, all in one program.

People ask me: JAWS is, yes, quite expensive, but I don’t have any money, or I don’t get NDIS funding that will cover JAWS. How, then, can I work a computer with a screen reader?

Well, there’s a free screen reader that is okay. It’s not my favourite screen reader, but it certainly does work.

I’m just going to turn JAWS off for you.

[AI voice speaks]

Unloading JAWS

[Michelle speaks]

“I’m just going to start what’s called NVDA now. That stands for NonVisual Desktop Access. Okay. NVDA has now launched, and yes, I’ve also got it connected to my Braille Display. You can download NVDA, and that’s something I can help you with if you don’t have that sort of, um, technology or information. With NVDA, you can read documents, websites, etc.

Let’s perhaps go to something like Google. Just something very quickly: ‘Run, Windows Run.’ I didn’t realize I still had my hearing aids connected. I’m just waiting for the… I’m not sure… in actual fact, we’re not connected to the internet, are we? I’ve just realized that, but you can actually…

[AI voice speaks]

“Run dialogue. Type the name of a pro…”

[Michelle speaks]

So, you can do that when you’re connected to the internet. I’m at Ross House, where I work, but we’re a little way away from the office, so I’ve got no internet here at the moment, although I could, if I wanted to, connect my iPhone to the laptop to get an internet connection.

I just want to turn the voice off for a minute and quickly show you a screen reader that comes with Windows, called ‘Narrator.’ I’ll just turn the voice off for you for a minute.”

[AI voice speaks]

“Exit NVDA dialogue.

What would you like to do?

Combo box exit collapsed

alt plus d okay button, okay.”

[Michelle speaks]

“I’ve just told NVDA to exit the program. Okay, now let’s go to Narrator. Narrator is a free program that comes with Windows. I believe you can access braille support with it, but what happens is, if you want to use other screen readers, Narrator does tend to take over the drivers.

So, let’s turn this on for a moment.”

(Screenshot of Michelle’s monitor)

[AI voice speaks]

“Narrator dialogue, okay button, alt plus, heads up don’t show again, check box unchecked, all space checked, turn off narrator, okay button, narrator heading, level one. Welcome to Narrator.”

[Michelle speaks]

“Okay, now I’ve got Narrator, as I said you can actually…

[AI voice speaks]

“FS Reader 3.0,”

[Michelle speaks]

…be quiet. Okay, you can connect Narrator and use it. Narrator gives you basic information in Windows; however, I strongly suggest JAWS or, if you don’t have funds for JAWS, I think something along the lines of NVDA is quite good. I’m just going to close Narrator for you for a minute and show you some features of JAWS.

I just want to show you a feature of JAWS which I think is really cool. You can run a training program called FS Reader. FS Reader is a program that will teach you how to navigate around your computer with JAWS. There are also other programs you can use with NVDA, and I think the browsers you use also give you that access.

So, how about we go into FS Reader and let you have a look at how this works. I’m just opening FS Reader here, and FS Reader is now opening. I wanted to find out if everyone is following what I’m saying. I hope I’m not talking too fast for the interpreters, and I hope everyone is happy and following what we’re saying. So, can we find out if everyone’s happy?

Is the information I’m giving coming across, or is there perhaps something that’s really gone over your heads, and I need to backtrack and explain?

Okay, now I’ve got VoiceOver here, where I’m actually…

[AI voice speaks]

“Log in failed…”

[Michelle speaks jokingly to the computer]

“You would have to, wouldn’t you?”

[Michelle speaks to viewers]

“So, you can use VoiceOver on the Mac, etc., and in actual fact it’s a very, very different system to JAWS, which I was showing you just before. You can also use the screen magnification that comes built in with the Mac, which is a little bit like the screen magnification you can use on your iPhone. People, including people with Usher syndrome, use it very successfully. You can change the iPhone or the Mac to whatever background colours, etc., you like; however, if your vision is starting to deteriorate, you can use your Mac connected to a refreshable Braille Display.

So, that’s one thing. I’m just going to see if I can log in so I can actually show you.”

[AI voice speaks]

“…tips, see what’s new in macOS Monterey. Discover new way…”

[Michelle speaks]

“Right. Thank you.

I’m in. What I will do is… well, you can pick various programs from your desktop.”

[AI voice speaks]

“Preview has no windows.

Preview has no windows

Safari has no windows.”

[Michelle speaks]

“I’m just going to close that. Let’s have a look: you can pick whatever program you want on the Mac.

(Screenshot of the ElBraille)

I also want to show you a computer that has been adapted specifically for blind people, called the ElBraille. I’m just going to have to put my ElBraille back into the… I just wanted to show you some features of the ElBraille. I’m just going…

[AI voice speaks]

to space,

[Michelle speaks]

The ElBraille is like a little computer that runs Windows 10, and they’re now developing the same computers for Windows 11. I failed to mention that my laptop here actually has Windows 11, working with JAWS 2022. Also, I’m having a little bit of trouble with my Mac here because I haven’t used it for quite a while, and last night I was busily updating it to the latest version of macOS, so I haven’t had time to fine-tune it for today. But at least you get some idea that you can plug a Braille Display into the Mac and use it with VoiceOver, which comes free with the Mac.

Whereas with Windows, if you want JAWS, you have to pay for it. This is only a personal view, and I don’t want to influence anyone, but I really do prefer JAWS, because once it’s set up for you, you can learn to do so much with it, and at the same time you can adapt JAWS to your own personal choices and preferences.

So, this computer here that I’m using is actually… and I think we’ve gone to sleep. I’m just going to see if we’ve gone to sleep. I think we have gone to sleep. Okay, this braille computer is called an ElBraille, E L B R A I L L E. Now, one of the features of the ElBraille is that it can work just like a Windows computer; however, if you are a…”

[AI voice speaks]

“See what’s new in macOS Monterey, discover new…”

[Michelle speaks]

“…if you are a braille reader, particularly if you are primarily a braille reader, you can use the ElBraille. If you can’t read braille, the ElBraille is really not for you.

I’m just going to see if I can turn this on. One of the differences between the ElBraille and the other 2 computers is that it doesn’t have a screen, because blind people mostly don’t really need one. That could be a downside, but if something happens, you can connect a monitor to this computer. I just wanted to show you something before we run out of time. Can someone tell me how we’re going for time, please?

I just wanted to show you the FS Reader that we spoke about a little while ago.”

[AI voice speaks]

“List view not selected, recycle bin.”

[Michelle speaks]

“This program actually runs with JAWS, and it can give you audio instructions; you can also use the FS Reader training package to help get you started with JAWS. So it doesn’t matter if you can’t hear; you can use braille in whatever braille code you want to read.

Look, today is only a very, very brief introduction. I can’t go into in-depth information, but this is something I’d be very proud to present to you if you want to follow up at a later time. I will also give you my contact information in braille and large print, which I’ll send through to Janet to give to you. So, if you want to contact me, send me a text message if you can, or send me an email. If you don’t have either of those, you can hopefully contact me through Deafblind Victoria or through my business, so I will give you information on how to contact me.

One thing I meant to say, and actually forgot about, is that I was born blind. I lost my hearing because I had severe ear infections as a child, so I grew up blind and then lost my hearing. I use a Baha on my right ear and a cochlear implant in my left ear, which I haven’t got off today, because I’ve just got so many other things going on in my brain at the moment. So, I’m very happy to give you the floor so you can ask any questions. Maybe there are things that have gone over your head. I don’t know, but I’m very, very happy to make sure that you are fully aware of what’s happening. Thank you.”

[AI voice speaks]

“Can JAWS magnify documents or recognize bank notes?”

[Michelle speaks]

“Yes, it can. Well, not JAWS itself. There’s another program, called Fusion, F U S I O N, that has JAWS and a magnifier program already built in. Fusion comes from the same company that makes JAWS, and one thing that is really good about Fusion is that you can use braille when your sight starts becoming worse. You can then use braille with Fusion, and you can also use Fusion’s built-in magnification program. There’s also a program called ZoomText, Z O O M T E X T; however, I think a lot more people are now using Fusion. That’s also something I could help you with. JAWS itself doesn’t have screen magnification, but Fusion has voice, braille and magnification.”

[AI voice speaks]

“I have Usher’s syndrome. Do I need to learn braille?”

[Michelle speaks]